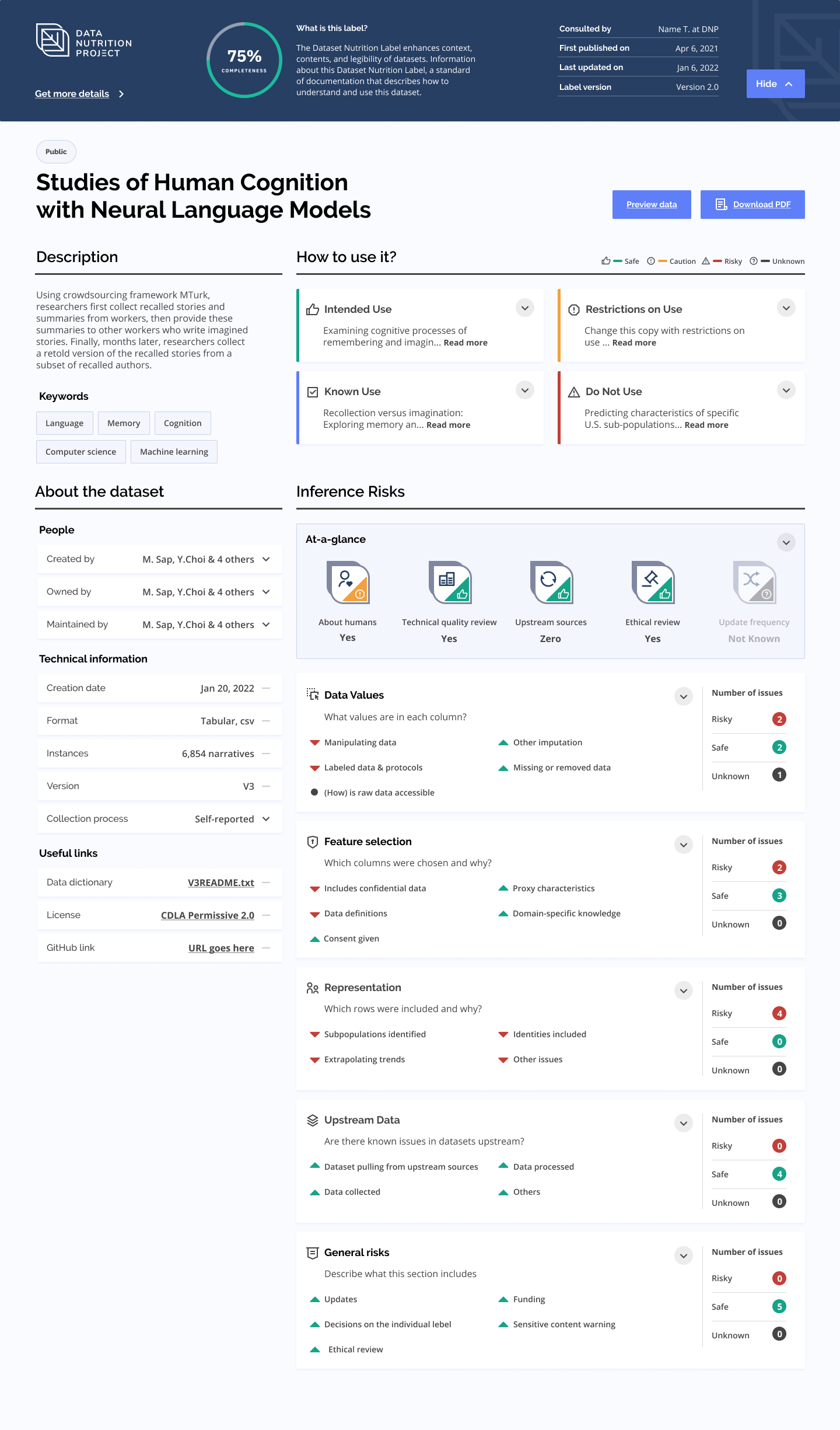

The Tool

A "nutrition label" for datasets.The Data Nutrition Project aims to create a standard label for interrogating datasets.

Our belief is that deeper transparency into dataset health can lead to better data decisions, which in turn lead to better AI.

Founded in 2018 through the Assembly Fellowship, The Data Nutrition Project takes inspiration from nutritional labels on food, aiming to build labels that highlight the key ingredients in a dataset such as metadata and demographic representation, as well as unique or anomalous features regarding distributions, missing data, and comparisons to other "ground truth" datasets.

Building off of the modular framework initially presented in our 2018 prototype and further refined in our 2nd Generation Label (2020), based on feedback from data scientists and dataset owners, we have further adjusted the Label to support a common user journey: a data scientist looking for a dataset with a particular purpose in mind. The third generation Dataset Nutrition Label now provides information about a dataset including its intended use and other known uses, the process of cleaning, managing, and curating that data, ethical and or technical reviews, the inclusion of subpopulations in the dataset, and a series of potential risks or limitations in the dataset. You may additionally want to read hereabout the second generation (2020) label, which informed the third generation label.